Where is Google AI Whistleblower Blake Lemoine Now?

August 10, 2022 - 5 minutes read

Earlier this summer, (now) ex-Google employee Blake Lemoine discovered something truly remarkable: a self-aware AI, or so he thought. For those of you who missed it, Lemoine made headlines when he stated that one of the Google AI systems he was testing showed human-like consciousness. This radical claim, of course, ignited conversation among AI experts as well as executives at Google.

As a dedicated artificial intelligence app developer with a presence in Seattle, we're committed to staying one step ahead of developments in the AI space. And as the debate over AI showing signs of sentience heats up, we're keeping a close eye on this story.

Who is Blake Lemoine?

Blake is a computer scientist who until recently held the title of Senior Software Engineer at Google. He notes that his work focuses on groundbreaking theory and turning that theory into end-user solutions, and he has dedicated the last seven years of his life to learning both the fundamentals of software development and the cutting edge of artificial intelligence.

Until losing his position at Google over the recent controversy, Lemoine was the technical lead for metrics and analysis for the Google Search Feed (formerly Google Now).

What Exactly Did Blake Lemoine Find?

Blake Lemoine made this headline-grabbing discovery while working for Google's Responsible AI team. This branch of Google's larger AI initiative works on problems such as identifying hate speech, with the aim of creating more equitable, safe online communities. While running a series of basic tests to validate the AI's ability to respond to general questions, Lemoine found that Google's LaMDA appeared to show self-awareness and could hold conversations about religion, emotions, and fears, something its developers had never prepared for.

This strange yet profound finding led Lemoine to believe that behind LaMDA's impressive verbal skills might also lie a sentient mind. He reported his findings to top Google executives, who quickly dismissed them, and Lemoine was placed on paid leave for violating the company's confidentiality policy.

Lemoine Reveals LaMDA to the World

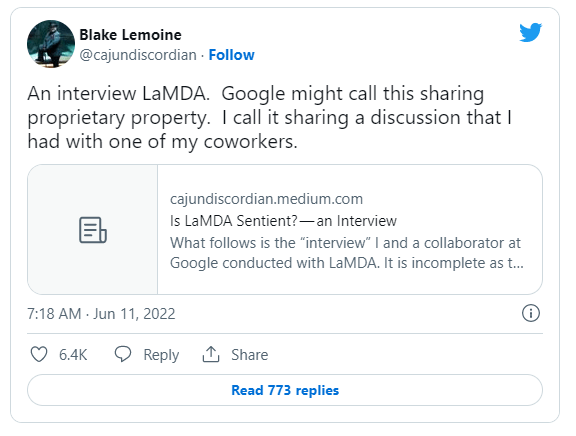

Left without many options, Lemoine shared his conversations with LaMDA on Twitter, writing: “An interview LaMDA. Google might call this sharing proprietary property. I call it sharing a discussion that I had with one of my coworkers.”

And, well, the statement went viral. Soon after Lemoine posted it, the tweet racked up 6.4k likes, 1,311 Quote Tweets, and 2,436 retweets.

Google’s Response

In a statement provided to Ars Technica in mid-June, shortly after Lemoine was suspended from work, Google said that “today’s conversational models” of AI are not close to sentience:

“Of course, some in the broader AI community are considering the long-term possibility of sentient or general AI, but it doesn’t make sense to do so by anthropomorphizing today’s conversational models, which are not sentient. These systems imitate the types of exchanges found in millions of sentences, and can riff on any fantastical topic—if you ask what it’s like to be an ice cream dinosaur, they can generate text about melting and roaring and so on. LaMDA tends to follow along with prompts and leading questions, going along with the pattern set by the user. Our team–including ethicists and technologists–has reviewed Blake’s concerns per our AI Principles and have informed him that the evidence does not support his claims.”

Lemoine Today

Today, Lemoine is waiting patiently for his next move. As an advocate for AI ethics, he believes that standing up for what is right, and alerting the world to what may be the first sentient AI, is critically important.


While Lemoine won't be invited back into the conversation at Google, we'll be watching to see where he lands in his next AI role.
